016 - Text Recognition, Large Models and Expectations

Since the boom around ChatGPT almost a year ago, I've heard several people wonder whether "tools like ChatGPT" were more efficient than HTR models trained with Kraken and the like. The glimmer of hope in their eyes was most likely lit by their own struggles to set up successful and/or efficient HTR campaigns with more traditional tools. The capacity of Large Language Models (LLMs) to reformulate a text¹ or, more specifically, of Large Multimodal Models (LMMs) to generate text from a visual input may indeed lead people to believe that HTR technologies built on CNNs are on the verge of being turned upside down.²

Annika Rockenberger recently conducted a series of small experiments on the matter and wrote an interesting blog post about it. Let's summarize it!

She signed up for a premium subscription ($25/month) to be able to chat with GPT4, which allows users to upload images. She then submitted printed or handwritten documents she would normally transcribe with Transkribus and assessed the results. She found that GPT4 was fairly good on historical print (German Fraktur) and that it was even able to follow transcription guidelines if provided with an example. However, on a letter written in cursive, the model completely hallucinated the content and attempted a transcription in the wrong language. This didn't change when she provided more context about the document. Rockenberger concludes that there is potential in using ChatGPT for HTR, but that whether it can be scaled up is highly uncertain, and that learning to write prompts that yield appropriate results is a challenge in itself. I would also add that, in the end, Rockenberger paid $25 to get 10 lines of raw text, whereas with software like Transkribus or eScriptorium she would also have gotten a standard structured output (such as ALTO or PAGE XML).

In other words, after reading Rockenberger's post, one can conclude that GPT4 (or, better, similar free and open source models) does have potential for "quick and dirty-ish" OCR. However, I would argue that users tempted by this strategy might still miss an important point: even LMM-based tools will require some organization and precision from their users. In my experience, this is precisely what is often lacking in unsuccessful HTR campaigns. LMMs may generate good output, but you will likely pay for it one way or another: with lower text recognition quality, with hallucinated text content, with an impoverished, unstructured output, with premium fees, etc.

Earlier this year, an article by Liu et al. (2023), "On the Hidden Mystery of OCR in Large Multimodal Models", explored almost exactly the same topic, but more comprehensively. It presents an extensive survey of how well several LMMs perform on "zero-shot" tasks.

Zero-shot refers to requesting an output from an LLM or an LMM without training it for that specific task. It is very similar to Rockenberger's first attempt with GPT4, when she uploaded the image of a printed document and asked for its transcription. In that case, she relied on the model's capacity to transfer its knowledge to the specific task of text recognition, on a specific type of document (historical printed text).

Two other terms are often associated with "zero-shot": "one-shot" and "few-shot". One-shot corresponds to Rockenberger's second attempt, when she showed GPT4 the output she expected for the first 10 lines of the document and asked the model to follow the same approach to transcribe the next 10 lines. Few-shot would mean showing the model several pages along with their expected outputs before asking for the transcription of a new document.³
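To make the distinction concrete, here is a minimal Python sketch showing that the three set-ups differ only in how many worked examples are placed before the actual request. The message structure (the `role`, `content` and `image` keys), the `Example` class and the file names are hypothetical and do not follow the schema of any particular API; actually sending the prompt to a model is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """Hypothetical (image, expected transcription) pair shown to the model."""
    image_path: str
    transcription: str


def build_prompt(instruction: str, examples: list[Example], target_image: str) -> list[dict]:
    """Assemble a chat-style prompt (illustrative structure, not a real API schema).

    0 examples -> zero-shot, 1 example -> one-shot, more -> few-shot.
    """
    messages = [{"role": "system", "content": instruction}]
    for ex in examples:
        # Each worked example: an image, followed by the answer we expect for it.
        messages.append({"role": "user", "image": ex.image_path, "content": "Transcribe this image."})
        messages.append({"role": "assistant", "content": ex.transcription})
    # The actual request: a new image, with no answer attached.
    messages.append({"role": "user", "image": target_image, "content": "Transcribe this image."})
    return messages


def shot_type(examples: list[Example]) -> str:
    """Name the prompting set-up from the number of worked examples."""
    n = len(examples)
    return "zero-shot" if n == 0 else "one-shot" if n == 1 else "few-shot"


guidelines = "Transcribe the text line by line, keeping the original spelling."
zero = build_prompt(guidelines, [], "lines_11-20.png")
one_example = [Example("lines_1-10.png", "<expected transcription of lines 1-10>")]
one = build_prompt(guidelines, one_example, "lines_11-20.png")
print(shot_type([]), len(zero))        # zero-shot 2
print(shot_type(one_example), len(one))  # one-shot 4
```

In a few-shot set-up, the `examples` list would simply hold several pages with their expected transcriptions.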

The paper focused on currently available LMMs representing five different approaches to training LMMs:

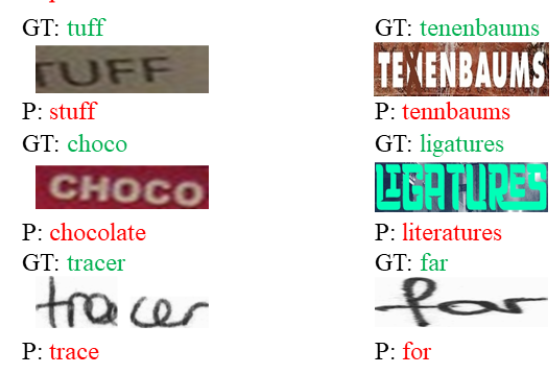

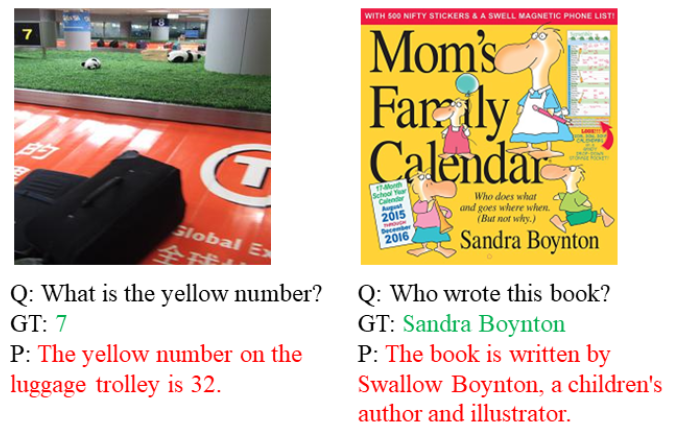

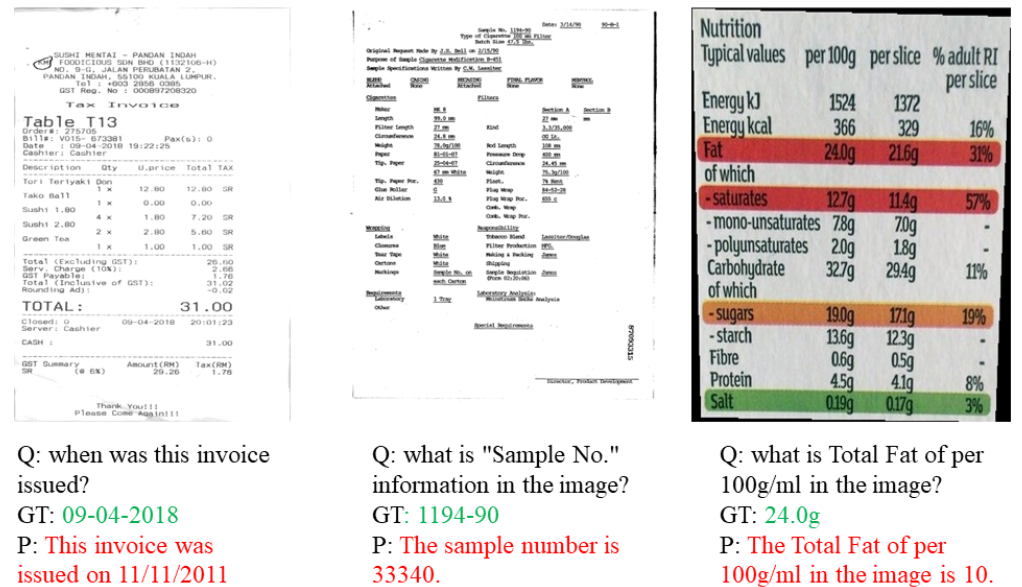

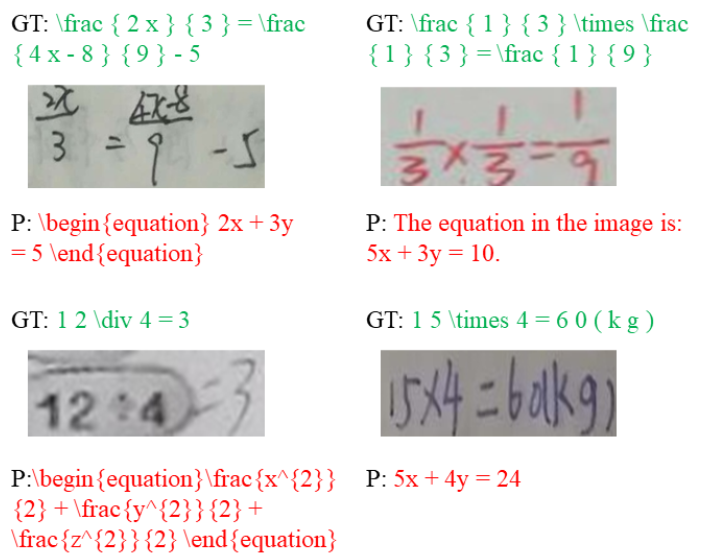

They evaluated the models on four tasks: text recognition, text-based visual question answering, key information extraction and handwritten mathematical expression recognition. Here are a few examples of what these tasks entail, as illustrated in the original article (in the images, P stands for Prediction and GT for Ground Truth):

| Task | Example |
|---|---|
| Text Recognition |  |
| Visual Question Answering |  |
| Key Information Extraction |  |
| Handwritten Mathematical Expression Recognition |  |

For each task, they used several datasets presenting different challenges. For each of these datasets and tasks, they retrieved the state-of-the-art (SOTA) scores of supervised methods and used them as a baseline. For example, for text recognition on the IAM dataset, the SOTA method, AttentionHTR,⁴ reaches a word accuracy of 91.24%.⁵ In comparison, Liu et al. report the following scores for the tested LMMs on this dataset:

| Tested LMM | Word accuracy on IAM (%) |
|---|---|
| BLIP-2 OPT6.7b | 38.00 |
| BLIP-2 FlanT5XXL | 40.50 |
| OpenFlamingo | 45.53 |
| LLaVA | 50.40 |
| MiniGPT4 | 28.90 |
| mPLUG-Owl | 42.53 |
| *Supervised SOTA (AttentionHTR)* | *91.24* |
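On a word-level dataset like IAM, word accuracy can be read as the share of word images whose prediction matches the ground truth. The sketch below assumes exact matching after trimming whitespace and case-folding; Liu et al.'s own matching rules may differ, and the word pairs are invented toy data, not taken from IAM.

```python
def word_accuracy(predictions: list[str], ground_truths: list[str]) -> float:
    """Percentage of word images whose prediction matches the ground truth.

    Assumption: exact match after stripping whitespace and case-folding.
    """
    if len(predictions) != len(ground_truths):
        raise ValueError("predictions and ground truths must be aligned")
    if not ground_truths:
        raise ValueError("empty evaluation set")
    correct = sum(
        p.strip().casefold() == g.strip().casefold()
        for p, g in zip(predictions, ground_truths)
    )
    return 100 * correct / len(ground_truths)


# Invented toy data: 2 of the 4 predictions match their ground truth.
preds = ["the", "letter", "Kraken", "hous"]
gts = ["the", "latter", "kraken", "house"]
print(word_accuracy(preds, gts))  # 50.0
```

Note that a near miss ("hous" for "house") counts exactly as much as a completely wrong word under this metric.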

The examples illustrated in the article are all failed attempts, but they reflect the overall impression conveyed by the experimental results. Indeed, on zero-shot tasks, the LMMs are largely outperformed by state-of-the-art supervised methods, as is visible in the case of text recognition on the IAM dataset. The only exception is BLIP-2 on text recognition for WordArt, a more challenging dataset of artistic text. The authors see this as a sign that LMMs have promising potential for visually complex text.
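To put a number on "largely outperformed", the snippet below takes the IAM scores from the table above and computes, for each LMM, the gap in percentage points to the supervised baseline, as well as the share of the baseline reached by the best LMM. It is plain arithmetic on the reported figures; the variable and function names are only illustrative.

```python
# Word accuracy on IAM (%), as reported by Liu et al. (2023).
IAM_SCORES = {
    "BLIP-2 OPT6.7b": 38.00,
    "BLIP-2 FlanT5XXL": 40.50,
    "OpenFlamingo": 45.53,
    "LLaVA": 50.40,
    "MiniGPT4": 28.90,
    "mPLUG-Owl": 42.53,
}
SUPERVISED_SOTA = 91.24  # AttentionHTR


def gaps_to_sota(scores: dict[str, float], sota: float) -> dict[str, float]:
    """Percentage-point gap between each LMM and the supervised baseline."""
    return {name: round(sota - score, 2) for name, score in scores.items()}


gaps = gaps_to_sota(IAM_SCORES, SUPERVISED_SOTA)
best = max(IAM_SCORES, key=IAM_SCORES.get)
share = round(100 * IAM_SCORES[best] / SUPERVISED_SOTA, 1)
print(best, gaps[best], share)  # LLaVA 40.84 55.2
```

Even the best-scoring model, LLaVA, trails AttentionHTR by about 41 points and reaches roughly 55% of its word accuracy.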

A very important section of their paper concerns the relationship between LMMs and semantics. When submitting images of non-words, they find that the LMMs systematically over-correct the prediction and suggest real words instead. Traditional text recognition approaches, on the other hand, are much less sensitive to how likely the word to recognize is. Similarly, the reliance on semantics interferes with the LMMs' output: they recognize common words more easily and make up additional letters ("choco" is read as "chocolate"). Lastly, LMMs are insensitive to word length: they are unable to count how many letters appear in the image of a word. These results match what Rockenberger experienced with the handwritten letter: the model hallucinated words to compose a semantically plausible letter, but with the wrong date, the wrong names, and the wrong language.
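The "choco"/"chocolate" case also shows why a character-level metric and a simple sanity check can be useful alongside word accuracy. Below is a minimal sketch, assuming the common definition of the character error rate (CER) as the Levenshtein distance between prediction and ground truth divided by the ground-truth length. This is not a metric reported by Liu et al. in the figures above, and `length_mismatch` is only a hypothetical heuristic for spotting made-up letters.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def cer(prediction: str, ground_truth: str) -> float:
    """Character error rate: edit distance normalized by ground-truth length."""
    return levenshtein(prediction, ground_truth) / len(ground_truth)


def length_mismatch(prediction: str, ground_truth: str) -> int:
    """Positive when the prediction has extra characters (hypothetical check)."""
    return len(prediction) - len(ground_truth)


print(cer("chocolate", "choco"))              # 0.8
print(length_mismatch("chocolate", "choco"))  # 4
```

Here the invented ending costs four edits, i.e. a CER of 0.8 on a five-letter word, and the length check flags four extra characters that a model insensitive to word length would not notice.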

Liu et al. conclude their paper by reminding us that they tested the models' capacities with zero-shot prompts, whereas there are already successful attempts at fine-tuning LLMs and LMMs on specialized tasks, such as medical prediction. In fact, I think such attempts already exist for HTR as well: this seems to be the ambition of a model like Transkribus' Text Titan, released at the beginning of the summer. It is based on a Transformer coupled with an LLM. Unfortunately, I wasn't able to find more information on this model, aside from the community-oriented communications released by Transkribus on their website (here and here).

1. Instead of a multimodal approach, Salvatore Spina explored the possibility of using an LLM-based tool like ChatGPT3 to post-process HTR results and correct the text. See: Spina, S. (2023). Artificial Intelligence in archival and historical scholarship workflow: HTS and ChatGPT (arXiv:2308.02044). arXiv. arXiv.2308.02044. ↩

2. Some researchers in the Digital Humanities community present multimodality as a real epistemological turn for the field. See for example: Smits, T., & Wevers, M. (2023). A multimodal turn in Digital Humanities. Using contrastive machine learning models to explore, enrich, and analyze digital visual historical collections. Digital Scholarship in the Humanities, fqad008. doi: 10.1093/llc/fqad008; or Impett, L., & Offert, F. (2023). There Is a Digital Art History (arXiv:2308.07464). arXiv. arXiv.2308.07464. ↩

3. A few videos offer more or less detailed explanations of these expressions in the context of prompting an LLM. However, the terminology is not specific to LLMs: it is also commonly used for classification or other NLP tasks, for example. ↩

4. Kass, D., & Vats, E. (2022). AttentionHTR: Handwritten Text Recognition Based on Attention Encoder-Decoder Networks (arXiv:2201.09390). arXiv. arXiv.2201.09390. ↩

5. In this case, word accuracy (closely related to the word error rate, or WER) is used as a baseline to compare different approaches. However, in general, it is not a good idea to rely only on word accuracy to understand a model's performance in real life. This is something I discussed in this post. ↩